
- Research

- Open Access

- Published:

Mining Chinese social media UGC: a big-data framework for analyzing Douban movie reviews

Journal of Big Data volume 3, Article number: 3 (2016)

Abstract

Analysis of online user-generated content is receiving attention for its wide applications from both academic researchers and industry stakeholders. In this pilot study, we address common Big Data issues of time constraints and memory costs involved with using standard single-machine hardware and software. A novel Big Data processing framework is proposed to investigate a niche subset of user-generated popular culture content on Douban, a well-known Chinese-language online social network. Huge data samples are harvested via an asynchronous scraping crawler. We also discuss how to manipulate heterogeneous features from raw samples to facilitate analysis of various movie details, review comments, and user profiles on Douban with specific regard to a wave of South Korean films (2003–2014), which have increased in popularity among Chinese movie fans. In addition, an improved Apriori algorithm based on MapReduce is proposed for content-mining functions. An exploratory simulation of results demonstrates the flexibility and applicability of the proposed framework for extracting relevant information from complex social media data, knowledge which can in turn be extended beyond this niche dataset and used to inform producers and distributors of films, television shows, and other digital media content.

Background

The last decade has witnessed the dramatic expansion of online social networks (hereafter OSNs) at the global level. More and more people are employing OSNs in their day-to-day lives to access information, express opinions and share experiences with their peers. As a result, massive volumes of content are generated every day from numerous social media channels. A typical example is Facebook, which was recently reported to receive 10 million new photographs every hour [1].

A significant proportion of online content is associated with the film domain, as many OSNs (such as Rotten Tomatoes, FilmCrave and Twitter) provide cinema fans with user-friendly mechanisms for posting and sharing their opinions or comments about movies online. Prospective audiences are increasingly inclined to rely on online reviews to make their own viewing choices, as well as a list of films upon which they might comment (but not necessarily see). As a result, investigating user-generated content (hereafter UGC) to discover significant patterns generated by these online audiences is becoming increasingly common [2–5]. The benefit of this type of research is derived from several aspects. For instance, UGC analysis reveals how electronic word-of-mouth (hereafter eWOM) can be utilized as a powerful communication tool and social networking channel for spreading awareness of a given movie in both the offline and online worlds. In addition, such analysis closes the gap between the film producer and audiences by offering a better understanding of consumers' opinions. In turn, the provision of media contents can be customized in terms of production, distribution, exhibition, and associated promotional advertising. By predicting audience preferences and future behaviors, not only can industry stakeholders promote their contents more effectively, but an improved user experience can be offered, and audiences can be better assisted in finding films related to their particular interests.

A survey of the relevant literature reveals that investigators have devised a number of approaches to UGC analysis related to film media [3, 4, 6–8]. For example, the sentiment analysis applied to movie reviews promises a better understanding of audience opinion. UGC-based prediction systems have also been proposed in relation to film ratings and box office performance. In addition, film recommendation systems can be regarded as another important outcome of UGC analysis. Some of these approaches are reviewed in "Related work".

Nevertheless, regardless of the growing interest in UGC analysis in general, there has been little research into the potential social benefits and commercial applications of Chinese-language eWOM as a tool for developing and utilizing UGC. In addition, most existing work focuses on a particular aspect of the available data (primarily film reviews) or specific metrics (such as box office statistics or movie ratings) that are analyzed with an average single machine (i.e. a PC with 4 cores and 4 GB of memory). These results are insufficient to provide a complete framework to cover all components of audience experience, or to measure the effects of both internal and external factors, all while performing the analytical processes in a timely and efficient way. More importantly, with the exponential accumulation of UGC, the challenges associated with the four big "V" problems, namely data volume (numbers of films, users, and generated reviews), variety (different data formats), velocity (streaming comment data), and veracity (language uncertainty), continue to multiply. This presents a typical scenario for Big Data processing, which is difficult to address using traditional analysis methods. Thus there is an increasing demand to develop alternative frameworks for conducting UGC analysis. Our aim is to develop an efficient and practical but novel technique for investigating big Chinese-language data sets associated with audience responses to international media contents, one that is superior to those available to researchers using traditional qualitative and quantitative survey or analysis instruments.

With this end in view, this paper describes an innovative collection and analytical tool, termed Douban-Learning (a brand name rather than a claim about the system's intelligence), which has been designed to facilitate UGC-based data mining in the film domain. The major point of difference that distinguishes our work from conventional methods is that the proposed framework is designed to address Big Data issues associated with a reliance on a single machine equipped with average hardware and software, while at the same time satisfying the type of costly data storage and computational requirements covered in previous studies [9, 10]. The major contributions offered here can be summarized as follows:

- An efficient framework, Douban-Learning, is implemented for processing large volumes of social media data based on the Hadoop platform. User-generated contents are collected, distributed, stored and processed on the Hadoop distributed file system (HDFS);

- An asynchronous scraping crawler is implemented via a multiple-task queue, which facilitates data collection in an efficient and simultaneous manner;

- Multiple heterogeneous features are generated to represent raw data records related to a variety of movie details (i.e. actor, director, writer, and story elements typically found in English-language comments on most OSNs), movie reviews, and user profiles. A novel extraction, transformation and load (ETL) process is introduced to facilitate quantification;

- An improved Apriori algorithm based on MapReduce is proposed to increase the flexibility and efficiency of Big Data mining.

The framework proposed here can in turn inform strategies not only for producing and promoting films featuring particular actors, plot elements, and locations, etc., but also for modifying or localizing stories for specific markets, regions, and target audiences.

The remainder of this paper is organized as follows. In "Related work", we briefly review some existing work on UGC analysis relevant to our own. We then introduce the Douban-Learning framework in "Douban-Learning framework", where three major modules are discussed in terms of data harvesting, feature generation and content mining. Our proposed framework is evaluated in "Experimental results", and in "Conclusion" we offer our conclusion and suggest further prospects for the system.

Related work

This section offers a brief review of state-of-the-art research in terms of UGC-based analysis. First, we investigate sentiment analysis, gleaned from users' comments. We then discuss UGC-based prediction systems for movie ratings or box office performance. We further investigate one existing popular application of UGC analysis: recommendation systems. Finally, some existing processing architectures and capabilities for mining UGC are also reviewed.

Sentiment analysis

One of the most important roles of UGC mining is to understand users' attitudes or preferences via sentiment analysis. Although sentiment analysis has been a major topic of natural language processing for many years, only recently has it attracted the interest of the Web-mining research community. One reason for this change is the increasing popularity and availability of large collections of topic-oriented information across online review sites, microblogs, and social networking sites. For our purposes, sentiment analysis is mainly applied to film reviews in order to extract subjective data from the comments made by particular audiences and categorize them as positive, neutral or negative. To express the matter in mathematical terms: let \({\mathbf {x}} = \left\{ x_1, x_2, \cdots , x_n\right\}\) be a tuple representing n textual features extracted from one review, and \(y \in \left\{ positive, neutral, negative\right\}\) be the class label. Sentiment analysis aims to train a classifier that extracts the decision rule subject to the following constraint:

$$\begin{aligned} y = f({\mathbf {x}} ) + e, \end{aligned}$$

(1)

where \(f(\cdot )\) is an unknown decision function to be estimated by the classifier, and e is the corresponding error. Essentially, the textual features (\({\mathbf {x}}\)) and the classification function (\(f(\cdot )\)) play the largest and most critical role in accurately determining the sentiment of a given review. A number of different textual features and classifiers have been proposed in the previous studies on which our study builds.

In [3], a heuristic sentiment analysis of movie reviews is proposed. Given the word class, the method explores textual features using the combination of "Adverb", "Adjective", and "Verb" for review classification. Samad et al. provide a hybrid algorithm to determine individuals' views by combining support vector machine (SVM) and particle swarm optimization (PSO) algorithms as the classifier [6]. Two typical features, word frequency and term frequency-inverse document frequency (TF-IDF), are extracted for training purposes. Experimental results show that the hybridization of SVM with PSO improves classification accuracy, compared to conventional SVM classifiers. A review summarization algorithm is proposed in [11], in which the latent semantic analysis (LSA) method is used to identify textual features, which are accessible to experts and non-experts alike because of their appearance in general sites such as the popular Internet Movie Database (aka IMDb) online site and mobile application. As a result, movie reviews are represented using feature-based summarization. A comprehensive study of movie tweets is found in [12]. Around 10,000 tweets are collected and labelled manually, and are then each converted into a binary textual feature to train an SVM classifier. Other sentiment analysis work can also be found in [13–15].

Prediction system

Movie revenue and box office statistics are critical measurements for quantifying a film's commercial success. Yet audience reviews from raw UGC can also offer a practicable model for predicting film performance. As with sentiment analysis, Eq. (1) can also be used as a general model for the prediction system. However, the class label y now applies to the film's revenue rather than its sentiment.

In [4], fourteen keywords (such as "love", "wonderful", "best") are extracted as textual features. Then a Naïve Bayes classifier is trained to predict trends at the box office. Yu et al. propose an approach based on probabilistic latent semantic analysis (PLSA) to extract sentiment factors, and two autoregressive models are then implemented for prediction [7]. Experimental analysis shows that positive sentiments are strong indicators of film performance. Similarly, in [16] the PLSA approach is once more employed to identify textual features. Furthermore, a fuzzy logic method is employed to quantify extracted features, followed by the application of a regression model for prediction. Another fuzzy-based hierarchy method is employed in [17] to generate features characterized in terms of information quality and source credibility. Real-world data samples are then collected from four OSNs, including Aditya's, Rotten Tomatoes, FilmCrave and Teaser Trailer, to conduct the prediction. The effect of another important OSN (Twitter) on movie sales is also investigated [18]. Experimental results show that positive tweets are indeed associated with higher movie sales, whereas negative film comments usually reflect lower movie sales. (Note that the prediction models in [7, 16, 18] are based on the (logistic) regression model.) Some other classifiers, such as neural networks, are proposed in [19, 20]. The superior simulation results obtained demonstrate the efficacy of neural network-based models over regression-based prediction.

Recommendation system

Movie recommendation systems, one of the most popular applications of UGC analysis, aim to suggest new movies to audiences based on their established preferences (generated from historical users' contents). Most existing recommendation systems fall into two categories: collaborative filtering (CF) and content-based (CB) methods.

CF methods make recommendations based on a group of other users with similar film preferences. Given a user list \(\mathcal {U}= \left\{ u_i | \forall u_i \in \mathcal {U}\right\}\), for any one target user \(u_i\), CF methods generate a sorted user list \(\widehat{\mathcal {U}}\), which satisfies the following conditions:

- \(\widehat{\mathcal {U}} \subset \mathcal {U}\), and \(u_i \notin \widehat{\mathcal {U}}\);

- \(sim\left\{ u_j, u_i\right\} \ge sim\left\{ u_k, u_i\right\}\) for all \(u_j, u_k \in \widehat{\mathcal {U}}\) with \(j < k\).

The variable \(sim\left\{ u_j, u_i\right\}\) represents the similarity between users \(u_j\) and \(u_i\). By finding the most similar user(s) to \(u_i\), the film recommendation is made by aggregating the historical watching information from \(\widehat{\mathcal {U}}\). Examples of CF-based movie recommendation systems include [5, 21, 22].
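The two conditions on the sorted user list can be illustrated with a short Python sketch. It is only an illustration under stated assumptions: cosine similarity over rating dictionaries is one common choice of similarity measure and is not named in the text, and the users, films and ratings below are invented.

```python
from math import sqrt

def cosine_sim(a, b):
    """Cosine similarity between two rating dicts (film -> rating).

    Cosine similarity is an assumed choice; the text leaves sim{.,.} open.
    """
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[m] * b[m] for m in shared)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)

def ranked_neighbours(target, ratings):
    """Return every user except `target`, sorted by decreasing similarity."""
    others = [u for u in ratings if u != target]  # the target is excluded
    return sorted(
        others,
        key=lambda u: cosine_sim(ratings[u], ratings[target]),
        reverse=True,
    )

# Invented toy data: three users and their ratings.
ratings = {
    "u1": {"m1": 5, "m2": 4},
    "u2": {"m1": 5, "m2": 4, "m3": 2},
    "u3": {"m1": 1, "m3": 5},
}
neighbours = ranked_neighbours("u1", ratings)
```

Here `neighbours` plays the role of the sorted list for target user `u1`; a recommender would then aggregate the viewing histories of the first few entries.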

By contrast, the CB recommendation system takes into account movie metadata such as film genres, actors, directors, and basic descriptions [23–25]. That is to say, the correlation between movies is utilized as the key criterion for movie recommendations. Let the list \(\mathcal {M}= \left\{ m_i | \forall m_i \in \mathcal {M}\right\}\) be the previously reviewed film list for the i-th user. Let the \(content(\cdot )\) function represent movie metadata, i.e., a set of pre-defined attributes or features characterizing movies. Accordingly, CB methods estimate a user's (\(u_i\)) preference for any movie \(m_j\) (\(m_j \notin \mathcal {M}\)) based on its similarity with \(\mathcal {M}\):

$$\begin{aligned} sim\left\{ \mathcal {M}, m_j\right\} = \sum sim\left( content(m_i), content(m_j) \right) . \end{aligned}$$

(2)
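As a rough illustration of Eq. (2), the sketch below sums a pairwise content similarity between a candidate film and each previously reviewed film. The Jaccard index over metadata sets is an assumption made for the example (the text does not fix the inner similarity function), and all film identifiers and attributes are invented.

```python
def jaccard(a, b):
    """Jaccard similarity of two attribute sets (assumed inner sim function)."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def cb_score(reviewed, candidate, content):
    """Eq. (2): sum of content similarities between the candidate film
    and every film the user has already reviewed."""
    return sum(jaccard(content[m], content[candidate]) for m in reviewed)

# Invented metadata: each film is described by a set of attributes.
content = {
    "m1": {"drama", "actor_a"},
    "m2": {"drama", "actor_b"},
    "m3": {"comedy", "actor_c"},
    "m4": {"drama", "actor_a", "actor_b"},
}
reviewed = ["m1", "m2"]  # the user's previously reviewed films

score_m4 = cb_score(reviewed, "m4", content)
score_m3 = cb_score(reviewed, "m3", content)
```

With this toy data the candidate `m4` shares attributes with both reviewed films and scores higher than `m3`, which shares none.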

More recently, some hybrid algorithms have been proposed to improve the accuracy of recommendations. For instance, in [8], both CF and CB-based recommenders are employed in parallel. A K-nearest-neighbour algorithm is implemented to estimate similarity. Meanwhile, clustering algorithms are also combined with the CF method to group together similar movies before recommendation [26]. Li et al. further suggest using a fuzzy K-means algorithm to cluster films with similar profiles [27]. These hybrid algorithms demonstrate their superiority over traditional recommendation systems by addressing issues such as data sparsity and cold start.

Other studies

UGC mining-based analysis can also be applied to discover relevant knowledge and to improve decision-making processes for individuals and organizations. In this context, some examples of existing architectures and processing capabilities include business intelligence, marketing, and disaster management. For example, in [28] the authors present a marketing campaign approach to using Facebook UGC. A streaming model is established for predicting the number of visits, profit or even return on investment (ROI) with respect to advertising elements. Another mechanism for analyzing the impact of advertising is carried out in [29]. By analyzing raw contents such as timestamps, brand-term frequency and individual responses, the results reveal customer feelings about a brand, as well as other economic and social variables impacting on a company. Furthermore, research in [30, 31] shows that contemporary companies that take advantage of UGC analysis seem to outperform their competitors, and report commercial benefits such as cost effectiveness and improved efficiencies. In addition, the study in [32] proposes a two-model approach to examining post-disaster recovery using social media data. User-generated content is first separated into active and passive perspectives, then a communication mediation and cultivation model is applied. Analytical results demonstrate that social media creates positive effects in post-disaster recovery.

Summary

Despite the amount of research devoted to UGC analysis, most of the work conducted to date has focused on particular aspects of film contents. At the same time, little analysis has been conducted on Chinese culture-based user contents, either from the point of view of users' demographic profiles or their language habits. More importantly, existing work is mainly conducted by using an average single machine or even manual calculation. Many challenges associated with data volume, variety, velocity and veracity still remain unresolved in conventional UGC analysis.

Taking all these aspects into account, the Douban-Learning framework is proposed as an efficient Big Data processing tool for Chinese social media platforms, with the ability to cover data collection, feature extraction and content mining. The proposed framework has a number of advantages which increase its utility in real applications:

- Unlike most conventional methods, Douban-Learning facilitates massive data analysis;

- Douban-Learning allows experts to apply multiple metrics to describe or explain features which can potentially yield results from multiple perspectives;

- Douban-Learning produces analytical results using association rules, findings that are easily interpretable and clearly expressed for use in decision-making.

Douban-Learning framework

In this section, we first provide some background information on the study area, including one famous Chinese OSN, Douban, and its users' attitudes to South Korean (hereafter Korean) films. Douban film forums, which have been engaging with an increasing wave of Korean films and stars since the Korean and Chinese governments and their respective film industries began planning a co-production treaty in 2011, are by far the largest part of this OSN. Next, the Big Data processing framework is introduced and the implementation of its three main modules is discussed. The implemented quantification algorithm and Hadoop platform are also introduced here.

Douban's Boost to Korean Cinema

Since the late 1990s Korean cinema has become one of the most dynamic national cinemas in the world. Korean films have made transnational connections across Asia and beyond, through their stylistic trends and experimentation with narrative and genres [33]. Given the key role and importance that Korean cinema occupies on the global stage, it is unsurprising that a parallel phenomenon exists in the online world. In this paper we focus narrowly on Korean films and their influence on Douban, one of the biggest interest-oriented Chinese OSNs. This social website attracts more than 100 million active visitors per month, and has amassed over 65 million registered users. In 2015, it was accessed by over 30 % of Chinese Internet users, making this platform a major magnet for film fans across China. Douban users are able to disseminate their opinions on a wide range of these international films, and to make recommendations to their followers and friends. The result is the generation of vast quantities of self-interested user records.

However, documenting these publicly available online records and mining them for useful information about Chinese movie audiences and their behavior toward international films is a challenging undertaking, especially with a single machine. It involves the collection of massive amounts of data created by film reviewers, ranging from individual users to geographically based catchments that grow over time. Furthermore, to gain a comprehensive understanding of Chinese-based UGC in this area, a variety of factors must be considered, such as specific features of films and movie reviews, as well as user profiles. In summary, the Douban OSN offers great potential as a Big Data application for analyzing Chinese UGC in ways that other studies, such as [34], have yet to master.

To explore this rapidly changing arena, we selected a total of 114 Korean films released between 2003 and 2014. This subset includes the top 10 performing films in each year according to Korean box office statistics, which are publicly available on the Korean Film Council online database [35]. Whilst these films were clearly popular among Korean fans, the case is not necessarily the same for Chinese fans, and thus this particular dataset offers a relatively unbiased opportunity to investigate the nature of their reception in user comments on Douban. (The open nature of the Korean and Chinese-Douban dataset and its potential for reuse makes it possible for independent observers and readers to replicate and build upon the results discussed below.)

Douban-Learning framework

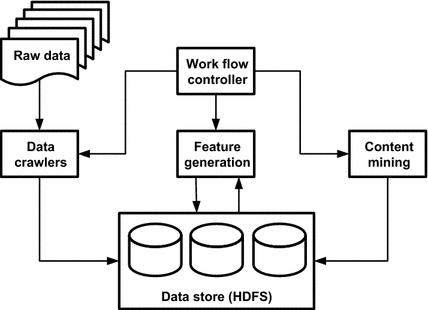

In this section, the Douban-Learning framework is proposed as a means of discovering critical patterns of audience behavior in Chinese UGC. The proposed framework consists of three main stages or modules, which are summarized as follows:

1. Data crawler module: raw data records are first collected and distributed via an asynchronous scraping crawler; all collected records are then uploaded to the Hadoop platform and stored on the HDFS;

2. Feature generation module: this module is used to identify significant attributes from the collected raw samples and to produce high-level features;

3. Content mining module: an improved association rule-mining algorithm is implemented in this module, allowing us to categorize and interpret UGC with multiple heterogeneous features.

A more detailed description of the individual modules and their functionalities is presented below. The proposed Douban-Learning framework is also shown in Fig. 1.

Fig. 1 Architecture of the Douban-Learning framework for film analysis

Data crawler

To begin with, a scraping crawler module is implemented in the first stage of our framework to collect raw samples. Douban allows access to its data via public Application Programming Interfaces (APIs), and the samples that it provides are formatted as JSON. Despite the general availability of this information, a systematic process to eliminate redundant or unnecessary content via an asynchronous scraping crawler has been developed to overcome these constraints.

The scraping crawler module consists of one global controller and multiple workers, which are configured on different computer nodes. The controller is used to manage account details, distribute multiple IP addresses, keep track of tasks, and audit status reports. The workers, on the other hand, execute scraping tasks concurrently. More precisely, when the list of target movies has been determined, the controller generates a task queue and then randomly assigns a priority to each movie. Note that films with a higher priority will be assigned to workers before those with a lower priority.

Next, an idle worker is initialized with a valid account and IP address for an assigned film. The worker creates further scraping threads for various contents from Douban. When the API constraint is applied, the worker is halted by creating a break point and recording the current status. The global controller will later reactivate halted workers once a pre-defined condition (here a particular time period) is satisfied. This distributing–working–waiting–reactivating procedure is repeated for each worker until the assigned task is completed. The worker is then released and is ready for the next collection.
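The controller and worker cycle described above can be sketched with Python's `asyncio`. This is a simplified, single-process illustration and not the authors' distributed implementation: `fetch_page` is a hypothetical stand-in that makes no network call, the quota and cool-down values are invented, and each worker resumes itself after the waiting period instead of being reactivated by the controller.

```python
import asyncio
import random

async def fetch_page(movie, page):
    """Hypothetical stand-in for one API request; no network access is made."""
    await asyncio.sleep(0)
    return f"{movie}:page{page}"

async def worker(queue, results, pages_per_movie=3, quota=2, cooldown=0.01):
    """Take films from the task queue and scrape them page by page.

    When the (invented) request quota is exhausted, the worker waits at a
    break point and then resumes from the page it had reached.
    """
    calls = 0
    while True:
        try:
            _priority, movie = queue.get_nowait()
        except asyncio.QueueEmpty:
            return  # no tasks left: the worker is released
        page = 0  # break point: index of the next page to fetch
        while page < pages_per_movie:
            if calls == quota:
                await asyncio.sleep(cooldown)  # waiting phase
                calls = 0                      # reactivated
            results.append(await fetch_page(movie, page))
            calls += 1
            page += 1

async def controller(movies, n_workers=2, seed=0):
    """Build the task queue with random priorities and run the workers."""
    rng = random.Random(seed)
    queue = asyncio.PriorityQueue()
    for movie in movies:
        # In asyncio.PriorityQueue the smallest number is served first.
        queue.put_nowait((rng.random(), movie))
    results = []
    await asyncio.gather(*(worker(queue, results) for _ in range(n_workers)))
    return results

collected = asyncio.run(controller(["film_a", "film_b", "film_c"]))
```

Keeping the break point as a plain page index is what lets a halted worker continue where it stopped instead of restarting the film.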

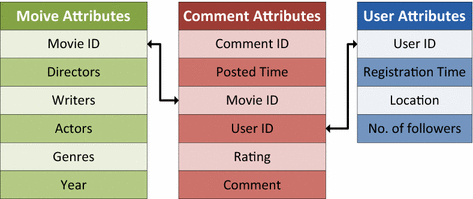

In this data collection stage, three categories of Douban contents are considered: movie details, review comments and user profiles. (The raw attributes and relationships between contents are represented in Fig. 2.) Collected samples are then distributed and stored separately according to the data category. That is, for a particular movie, all comments are recorded in one log file, while the related user profiles are kept in another log file. The special symbol "\(\#\)" is used to separate raw attributes. Note that a global file is created individually to store the basic film details in the controller node, whereas review comments and user profile data are stored in worker nodes. Therefore, the results from the first stage of our framework are collected records, which are then uploaded to the Hadoop platform and stored on the HDFS.

Fig. 2 Data structure and relationships between Douban contents–films, reviews and users
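To make the "#"-separated log format concrete, here is a minimal Python sketch of writing and reading one review record. The field order (user id, rating, date, comment) is hypothetical, and so is the handling of a "#" that occurs inside a comment: the sketch replaces it with a space, which is an assumption and not something the text specifies.

```python
SEP = "#"  # attribute separator used in the log files

def to_log_line(fields):
    """Join raw attributes into one log line.

    Any '#' inside a field is replaced by a space so the line can be
    split again unambiguously (an assumption made for this sketch).
    """
    return SEP.join(str(f).replace(SEP, " ") for f in fields)

def from_log_line(line):
    """Split one log line back into its raw attributes (all strings)."""
    return line.rstrip("\n").split(SEP)

# Hypothetical review record: user id, rating, date, comment text.
line = to_log_line(["user_42", 4, "2014-05-01", "great#film"])
record = from_log_line(line)
```

Note that every attribute comes back as a string, so numeric fields such as the rating need converting before any aggregation.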

Feature generation

The second stage in our framework involves the identification or extraction of subjective information from raw samples, which we then convert into usable features. Accordingly, a feature generation module is implemented in this stage using a Big Data extraction, transformation and load (ETL) tool (Hive [36]). A high-level feature can be regarded as a user-defined hierarchical representation of these initial raw attributes. In accordance with the record category, related feature lists are generated to encompass the aforementioned aspects: movie details, review comments, and user profiles (as summarized in Table 1). Again, these features are generated as they are typically found in English-language comments on most OSNs. Among these, some features can be directly extracted from raw attributes, whereas others require high-level aggregation.

To simplify the explanation, a normalization procedure is introduced first. Let \(\mathcal {S}\) be a finite set: \(\mathcal {S}= \left\{ s_i | \forall s_i \in \mathcal {S}\right\}\). The normalization function \(\left\| \cdot \right\|\) is defined as follows:

$$\begin{aligned} \left\| s_i \right\| = \frac{s_i}{\max \left( \mathcal {S} \right) }, \end{aligned}$$

(3)

where \(\max \left( \mathcal {S} \right)\) represents the maximal value in \(\mathcal {S}\). Herein the normalization procedure is implemented using the \(\max \left( \cdot \right)\) function from Hive.

For the raw film data, as shown in the first row of Table 1, note that the "genres" feature can be extracted from the original genre attribute. The film rating (\(R_i\)) and popularity (\(P_i\)) can be measured as follows:

$$\begin{aligned} R_i= \left\| \frac{\sum _{j=1}^{c_i}r_{i,j}}{c_i} \right\| , \end{aligned}$$

(4)

$$\begin{aligned} P_i= \left\| c_i \right\| , \end{aligned}$$

(5)

where \(r_{i,j}\) represents the rating given by the j-th comment on the i-th film, and \(c_i\) is the total number of comments for the i-th film.
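A small worked example may help with Eqs. (3)–(5). The sketch below is plain Python and not the Hive implementation used in the paper; it assumes every film has at least one comment and that the maximum is positive, and the ratings are invented.

```python
def normalize(values):
    """Eq. (3): divide every value by the maximum of the set."""
    peak = max(values)
    return [v / peak for v in values]

def rating_and_popularity(ratings_per_film):
    """Eqs. (4) and (5): normalized mean rating and normalized comment count."""
    means = [sum(r) / len(r) for r in ratings_per_film]  # mean rating per film
    counts = [len(r) for r in ratings_per_film]          # c_i per film
    return normalize(means), normalize(counts)

# Invented ratings: one inner list of comment ratings per film.
ratings_per_film = [[5, 4, 3], [2, 2], [4, 4, 4, 4]]
R, P = rating_and_popularity(ratings_per_film)
```

For this toy input the mean ratings are 4, 2 and 4 and the comment counts are 3, 2 and 4, so both features end up in the interval (0, 1] with the best film in each respect scoring 1.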

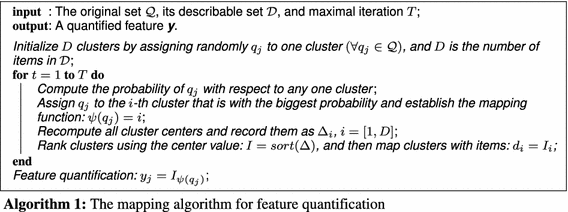

The calculations used in Eqs. (4) and (5) reflect two basic assumptions: first, the average film rating is strongly associated with the ratings drawn from individual comments; second, the more comments a film receives, the more popular it is. The problem with this approach to feature measurement is that the outcomes of both Eqs. (4) and (5) are continuous and difficult to use directly in real-world applications. Therefore, to quantify extracted features, the definition of a describable set is set out as follows:

Definition 1

Let \(\mathcal {Q}= \left\{ q_1, q_2, \cdots , q_Q\right\}\) be a Q-item set for a particular domain \(\psi\). Its describable set \(\mathcal {D}= \left\{ d_1, d_2, \cdots , d_D\right\}\) is a D-item set that satisfies the following conditions:

- \(d_i\) \((\forall d_i \in \mathcal {D})\) is a linguistic label that is used to describe a certain status of \(\psi\);

- \(\mathcal {D}\) has fewer items than \(\mathcal {Q}\), i.e., \(D < Q\);

- \(\psi (q_j) = d_i\), subject to \(F(q_j, d_i) < F(q_j, d_k)\) \((\forall d_i, d_k \in \mathcal {D},\ i \ne k)\), where \(\psi (\cdot )\) is a description function that maps \(q_j\) to a unique item \(d_i\), and \(F(\cdot )\) is a distance measure function (for simplicity, the Euclidean distance is employed herein).

As the definition shows, there exists a many-to-one relationship between the original and describable sets; that is, one item from \(\mathcal {D}\) is used to describe or map more than one item in \(\mathcal {Q}\). At the same time, each item from \(\mathcal {D}\) is a linguistic label that describes a unique status of \(\psi\). We further assume that these items (labels) are sorted in a particular order of status. A possible describable set for movie ratings, for instance, could be: \(\mathcal {D}= \left\{ high, medium, low\right\}\). A typical set for describing film popularity is \(\mathcal {D}= \left\{ popular, medium, unpopular\right\}\).

Note that domain knowledge for \(\psi\) is required to decide statuses ranging from high to low, and then to represent each status with one item in \(\mathcal {D}\). Different business or operational requirements may result in a variety of statuses, thereby producing various describable sets. The advantage of the describable set is that it converts continuous data into discrete labels, thereby enhancing business operation and understanding of the significant trends present in UGC.

In general, a mapping algorithm is introduced to quantify continuous features by representing them with the describable set; see Algorithm 1. Later, this mapping algorithm is applied to quantify film features, such as movie ratings (\(R_i\)) and popularity (\(P_i\)), respectively.

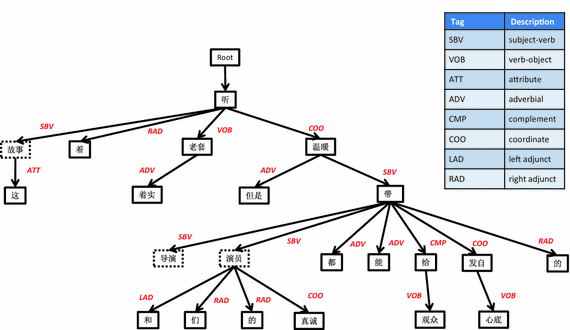
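As a minimal sketch of the idea behind Definition 1 (not a transcription of Algorithm 1), the following Python snippet maps a normalized value to the nearest label of a describable set. The numeric anchor attached to each label is a hypothetical choice that would in practice come from domain knowledge; for scalar values the Euclidean distance reduces to the absolute difference.

```python
def describe(value, anchors):
    """Map a continuous value to the label whose anchor is nearest.

    `anchors` maps each linguistic label to a representative value
    (hypothetical numbers chosen for this sketch). On a tie, the label
    listed first in `anchors` is returned.
    """
    return min(anchors, key=lambda label: abs(value - anchors[label]))

# Assumed anchors for the describable set {high, medium, low}.
rating_anchors = {"high": 1.0, "medium": 0.5, "low": 0.0}

labels = [describe(r, rating_anchors) for r in (0.9, 0.55, 0.1)]
```

Several distinct ratings collapse onto the same label, which is the many-to-one relationship the definition requires.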

The next step in the feature generation phase is to extract informative features from film reviews (as shown in the second row of Table 1). To this end, word segmentation and sentiment analysis techniques are implemented. The word segmentation technique involves the division of complete sentences into their syntactical and semantic components. Whereas in English a space is usually used to separate words, Chinese has a very different structure whereby word division is either non-existent or operates in different ways. Thus, any procedure adopted for Chinese word segmentation must consider the specific language habits of Chinese users.

In this paper, we use the LTP-Cloud service [37] for Chinese word segmentation. The LTP-Cloud service is an open-source Chinese language processing service that includes part-of-speech tagging, named entity recognition, and so on. In addition, for each specific film studied, multiple corpora were manually created to identify actor, director, writer, and story, with each category designed to elicit information about a particular aspect of the film. Further details can be found in our preliminary research findings [38, 39].

Some general keywords used in these corpora are shown in Table 2. Note that, in practice, the actual names of actors, directors, or writers for specific movies are included as well. Word segmentation is then carried out on the basis of these corpora. In adopting this approach, our analysis of the dataset constitutes a major advance on previous studies, which either rely on data available in English-language sources (which present fewer problems than a Chinese-language dataset) or are restricted to a single, and thus limited, dictionary of Mandarin terms.

Based on the word segmentation exercise, four high-level features (i.e., "actor", "director", "writer", and "story") are generated, reflecting the incidence of keywords from the four corpora in a single review. As an example, the word segmentation result for a user comment that translates as "This story sounds old fashioned, but the director and actors' sincerity can be felt by the audience" is shown in Fig. 3. The words or phrases isolated within each box represent the output of the word segmentation stage, while the matched keywords for the related corpus are found in the relevant boxes with dotted lines. Note that, in this case, one keyword each is matched to the "actor", "director", and "story" corpora. Thus, the related four-feature vector is (1, 1, 0, 1), as no writer keywords were found.

Word segmentation for a single user comment
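The keyword-matching step can be sketched as below. This is an illustration only: the corpora are hypothetical English stand-ins (the actual corpora are Chinese and also contain names of actors, directors, and writers), and the token list imitates what a word segmenter might return for the translated example comment.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical English stand-ins for the four manually built corpora.
CORPORA: Dict[str, Set[str]] = {
    "actor": {"actor", "actors", "cast"},
    "director": {"director", "directing"},
    "writer": {"writer", "screenplay"},
    "story": {"story", "plot"},
}
# Order of the four-feature vector used in the text.
FEATURE_ORDER = ("actor", "director", "writer", "story")


def keyword_features(tokens: List[str]) -> Tuple[int, ...]:
    """Count how many tokens of a segmented review match each corpus."""
    return tuple(
        sum(1 for token in tokens if token in CORPORA[name])
        for name in FEATURE_ORDER
    )


# Tokens as a segmenter might return them for the example comment.
tokens = ["this", "story", "sounds", "old", "fashioned", "but", "the",
          "director", "and", "actors", "sincerity", "can", "be", "felt"]
print(keyword_features(tokens))
```

With these stand-in corpora the tokens yield one match each for "actor", "director", and "story" and none for "writer", mirroring the vector (1, 1, 0, 1) described above.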

Moreover, the sentiment analysis method in [40] is employed in this paper to classify the sentiment of comments. The outcomes of the sentiment analysis are then taken as the value of the review "emotion" feature. At the same time, for the "review rating" feature, Eq. (6) is employed to convert the raw "Rating" attribute into a binary feature:

$$\begin{aligned} CR_{i,j}=\left\{ \begin{array}{ll} 1, & \quad \text {if }\quad r_{i,j} > R_i \\ 0, & \quad \text {if }\quad r_{i,j} \le R_i, \end{array} \right. \end{aligned}$$

(6)

where \(r_{i,j}\) is the rating given in the j-th comment on the i-th film, and \(R_i\) represents the average rating for the i-th film (see Eq. (4)). That is, individual ratings are divided into two groups according to their relationship to the average film rating. This binary approach to converting the rating attribute aids this preliminary study by simplifying the ratings as one of the key feature categories (listed in Table 3). Later, to quantify the six review features discussed above, Algorithm 1 is again employed.
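A minimal sketch of Eq. (6) follows. It assumes that \(R_i\) is the arithmetic mean of the ratings attached to film i; Eq. (4) in the paper defines the actual average, so this is a simplification. The function name and the sample ratings are hypothetical.

```python
from statistics import mean
from typing import List


def binarize_ratings(ratings: List[float]) -> List[int]:
    """Return 1 for ratings above the film's average rating, else 0 (Eq. 6)."""
    if not ratings:
        return []
    average = mean(ratings)  # plays the role of R_i (assumed simple mean)
    return [1 if r > average else 0 for r in ratings]


film_ratings = [5, 4, 3, 2, 4]  # illustrative ratings for one film
print(binarize_ratings(film_ratings))
```

Ratings equal to the average map to 0, in line with the \(\le\) branch of Eq. (6).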

For the user profile, four features (again shown in Table 1) are generated to model a given user's interests and behavior: location, activity, membership duration, and leadership. Among these, "location" is a spatial feature derived from users' demographic information. However, the original location attribute is collected at the city level. We therefore develop an aggregation function in Hive to map users either from city to province (for Chinese users) or from city to country (for international users).
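The aggregation itself is implemented in Hive in the study. Purely to illustrate the mapping logic, the following Python sketch uses two small hypothetical lookup tables; a real deployment would need complete gazetteers, and the function name `aggregate_location` is an assumption.

```python
from typing import Dict, Optional

# Illustrative lookup tables only (hypothetical, far from complete).
CITY_TO_PROVINCE: Dict[str, str] = {
    "Guangzhou": "Guangdong",
    "Shenzhen": "Guangdong",
    "Hangzhou": "Zhejiang",
    "Nanjing": "Jiangsu",
    "Beijing": "Beijing",    # municipality treated as a province
    "Shanghai": "Shanghai",  # municipality treated as a province
}
CITY_TO_COUNTRY: Dict[str, str] = {
    "New York": "United States",
    "Sydney": "Australia",
}


def aggregate_location(city: Optional[str]) -> Optional[str]:
    """Map a city to a province (China) or a country (overseas).

    Returns None when the user left no location or the city is unknown.
    """
    if not city:
        return None
    if city in CITY_TO_PROVINCE:
        return CITY_TO_PROVINCE[city]
    return CITY_TO_COUNTRY.get(city)


print(aggregate_location("Hangzhou"), aggregate_location("Sydney"))
```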

Furthermore, to measure the other user features, namely activity, membership duration, and leadership, the following definitions are proposed:

- The "activity" feature is computed from users' previous viewing lists. That is, the more Korean films watched by a user, the higher their activity level;

- The "membership duration" feature is used to measure the period of time elapsed since a user's registration. Thus, the earlier the date of registration with Douban, the longer the time span;

- The "leadership" feature is used to gauge the amount of influence exerted by a given user, which is related to the number of followers gained by a user since registration.

Let \(u_i\) be the total number of watched Korean films, \(d_i\) the registration date, and \(f_i\) the current number of followers for the i-th user. Based on these three assumptions, the features of activity (\(A_i\)), membership duration (\(S_i\)), and leadership (\(L_i\)) for the i-th user can be estimated as follows.

$$\begin{aligned} A_i = \left\| u_i \right\| , \end{aligned}$$

(7)

$$\begin{aligned} S_i = \left\| date(d_i, T_{now}) \right\| , \end{aligned}$$

(8)

$$\begin{aligned} L_i = \left\| \frac{f_i}{ date(d_i, T_{now}) } \right\| , \end{aligned}$$

(9)

where \(T_{now}\) is a constant for the current date, and the function \(date(\cdot )\) computes the number of days from \(d_i\) to \(T_{now}\). As before, Algorithm 1 is applied to quantify the continuous features.
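Eqs. (7)–(9) can be sketched as follows. The reference date `T_NOW` is an assumed value chosen for illustration, the function names are hypothetical, and the sketch returns raw values only: the \(\left\| \cdot \right\|\) wrapping and the subsequent quantification by Algorithm 1 are not reproduced.

```python
from datetime import date
from typing import Tuple

# Assumed reference date for illustration; the paper treats T_now as a constant.
T_NOW = date(2015, 4, 30)


def days_since(registered: date, now: date = T_NOW) -> int:
    """date(d_i, T_now): number of days from registration to the reference date."""
    return (now - registered).days


def user_features(watched_korean_films: int, registered: date,
                  followers: int) -> Tuple[int, int, float]:
    """Return raw (activity, membership duration, leadership) values."""
    activity = watched_korean_films           # Eq. (7)
    duration = days_since(registered)         # Eq. (8)
    # Eq. (9): followers gained per day; guard against a zero-day membership.
    leadership = followers / duration if duration > 0 else 0.0
    return activity, duration, leadership


print(user_features(12, date(2014, 4, 30), 730))
```

Dividing followers by membership duration means that a newer account with the same follower count scores higher on leadership than an older one.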

In summary, by combining the aggregation function and the proposed quantification algorithm, the feature generation module produces high-level features in the second stage of our proposed framework. A total of 13 features are produced from the raw samples, representing three categories of content on Douban: film, review, and user. Table 3 shows the final result of the generated features.

Content mining

In the third and final stage of the Douban-Learning framework, we seek significant correlations and extract new knowledge from the high-level features in Table 3. The content mining module is therefore implemented using association rule mining.

Rule mining is one of the most popular data mining tools used for such purposes due to its simplicity and efficiency. In general, association rules are used to describe dependence or correlation among features (or items). Thus, an association rule-based algorithm was considered for this module. A typical rule takes the form \(\mathcal {A}\longrightarrow \mathcal {C}\), where \(\mathcal {A}\) and \(\mathcal {C}\) stand for the antecedent and consequent sets of the rule, respectively, and \(\mathcal {A}\cap \mathcal {C} = \emptyset\). The rule implies that all items from \(\mathcal {A}\) have a high probability of being associated with items from \(\mathcal {C}\). Support, confidence, and lift are critical measurements for evaluating association rules, which are defined as follows [41]:

Definition 2

Given N data records, the support of \(\mathcal {A}\) (\(supp\left( \mathcal {A}\right)\)) is the proportion of records which contain all items from \(\mathcal {A}\), which can be computed as follows:

$$\begin{aligned} supp\left( \mathcal {A}\right) = \frac{ \left| \mathcal {A} \right| }{N}, \end{aligned}$$

(10)

where \(\left| \mathcal {A} \right|\) is the number of records that contain \(\mathcal {A}\). The confidence of the rule \(\mathcal {A}\longrightarrow \mathcal {C}\) is computed as:

$$\begin{aligned} conf\left( \mathcal {A}\longrightarrow \mathcal {C} \right) = \frac{supp\left( \mathcal {A\cup C}\right) }{supp\left( \mathcal {A}\right) }. \end{aligned}$$

(11)

The lift of a rule \(\left( \mathcal {A}\longrightarrow \mathcal {C}\right)\) is the ratio of the rule's confidence to the support of its consequent, which can be computed as follows:

$$\begin{aligned} lift\left( \mathcal {A}\longrightarrow \mathcal {C} \right) = \frac{conf\left( \mathcal {A}\longrightarrow \mathcal {C} \right) }{supp\left( \mathcal {C} \right) }. \end{aligned}$$

(12)

The support and confidence of a rule reflect item frequency, while lift is used to check the flexibility of rules (or the interestingness of rules [42]).
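The three measures in Eqs. (10)–(12) can be computed directly from a list of records. The sketch below uses four hypothetical records encoded as sets of "feature=value" strings; the function names follow the notation above, and the data are invented for illustration.

```python
from typing import FrozenSet, Iterable, List

Record = FrozenSet[str]


def supp(itemset: Iterable[str], records: List[Record]) -> float:
    """Eq. (10): share of records containing every item of the itemset."""
    items = frozenset(itemset)
    return sum(1 for record in records if items <= record) / len(records)


def conf(antecedent: Iterable[str], consequent: Iterable[str],
         records: List[Record]) -> float:
    """Eq. (11): supp(A ∪ C) / supp(A); assumes supp(A) > 0."""
    union = frozenset(antecedent) | frozenset(consequent)
    return supp(union, records) / supp(antecedent, records)


def lift(antecedent: Iterable[str], consequent: Iterable[str],
         records: List[Record]) -> float:
    """Eq. (12): conf(A -> C) / supp(C); assumes supp(C) > 0."""
    return conf(antecedent, consequent, records) / supp(consequent, records)


# Hypothetical records for illustration.
records = [
    frozenset({"genre=romance", "emotion=positive"}),
    frozenset({"genre=romance", "emotion=positive"}),
    frozenset({"genre=romance", "emotion=negative"}),
    frozenset({"genre=action", "emotion=negative"}),
]
A, C = ["genre=romance"], ["emotion=positive"]
print(supp(A, records), conf(A, C, records), lift(A, C, records))
```

A lift above 1 indicates that the antecedent and consequent co-occur more often than they would if they were independent, which is why high-lift rules are the ones of interest.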

Nevertheless, traditional association rule mining algorithms, such as Apriori, encounter many practical problems when processing large data sets. For instance, Apriori employs a "bottom-up" strategy to produce different levels of frequent itemsets. In addition, it requires repeated scanning of the entire dataset until all possible combinations of frequent sets are found. This typically leads to large-scale or high-dimensional results that exceed the processing capacity of a single computer. As a result, some improvements have been proposed to facilitate Apriori parallelization using MapReduce.

MapReduce is an effective process in the Hadoop platform for parallel computing and Big Data processing. A MapReduce process consists of two main phases, map and reduce. In the map phase, the input key-value pairs are processed individually by a map function, producing a second set of intermediate key-value pairs. The new pairs are then grouped according to their keys and provided as the input to the reduce function in the reduce phase. A third set of key-value pairs is then derived as the final output.
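The two phases can be imitated in a single Python process to make the data flow concrete. This is an in-memory simulation, not Hadoop code: the function names are hypothetical, and the job shown (counting how many records contain each item) is chosen because it corresponds to counting candidate 1-itemsets.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(record_id: int, items: List[str]) -> Iterator[Tuple[str, int]]:
    """Map: turn one input (key, value) pair into intermediate (item, 1) pairs."""
    for item in set(items):  # count each item once per record
        yield item, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group intermediate values by key, as the framework does between phases."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: collapse all values of one key into a final (item, count) pair."""
    return key, sum(values)


def run_job(records: List[List[str]]) -> Dict[str, int]:
    intermediate = [pair
                    for record_id, items in enumerate(records)
                    for pair in map_phase(record_id, items)]
    return dict(reduce_phase(k, v) for k, v in shuffle(intermediate).items())


print(run_job([["a", "b"], ["a", "c"], ["a"]]))
```

On a cluster, the map calls run in parallel on separate data splits and the grouping step moves data between nodes; only the per-record and per-key logic would carry over from this sketch.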

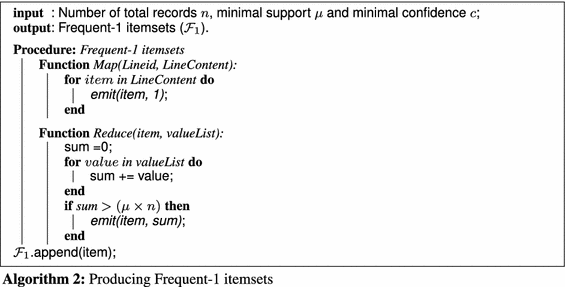

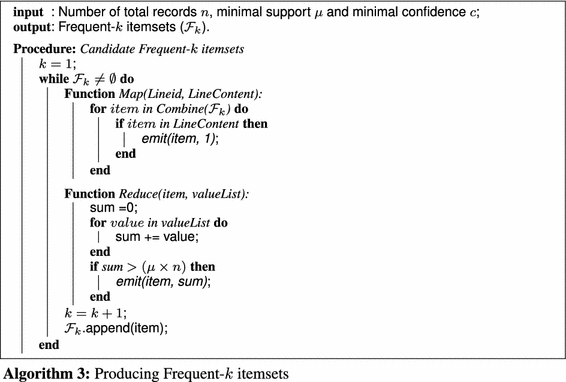

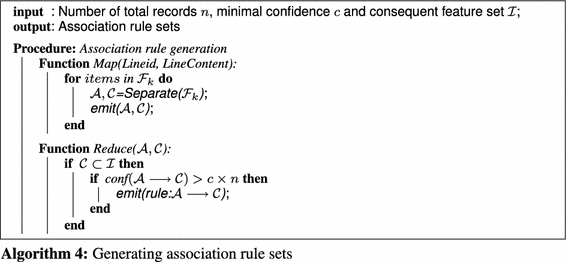

MapReduce-based processes dramatically improve the reliability and efficiency of conventional Apriori algorithms [43, 44]. However, a major limitation is that a large number of candidate association rules are generated, with many antecedent and/or consequent conditions. These rules are neither easy to process (due to their large number) nor straightforward to understand or interpret (due to their complexity). To solve this problem, in this paper we limit the category of features contributing to the consequent set. That is, only some features (to be pre-defined by experts) are permitted to appear in the rule consequent. To this end, a three-step parallel Apriori algorithm is proposed, as shown in Algorithm 2, Algorithm 3, and Algorithm 4, respectively. The proposed Apriori algorithm first applies the MapReduce process to generate frequent-1 itemsets (step 1) and candidate frequent-k itemsets (step 2), respectively. The association rule sets are then obtained by combining frequent itemsets (step 3) following the support-confidence framework [45]. That is, rules satisfying the minimum support and minimum confidence are selected. Furthermore, we add a constraint by limiting the feature category in the consequent set. Therefore, the valid rules from the proposed Apriori algorithm will be a subset of the full rule set. Again, this constraint is used to reduce the huge number of rules for better interpretation.
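Algorithms 2–4 are available here only as images, so the following is not the authors' parallel implementation. It is a single-machine sketch of the same idea, assuming items are encoded as "feature=value" strings: a level-wise Apriori search followed by rule generation in which only pre-selected features may appear in the consequent. All names and the sample records are hypothetical.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Set, Tuple

Itemset = FrozenSet[str]
Rule = Tuple[Itemset, Itemset, float]


def frequent_itemsets(records: List[Itemset],
                      min_supp: float) -> Dict[Itemset, float]:
    """Level-wise (Apriori) search for itemsets with support >= min_supp."""
    n = len(records)
    level = {frozenset([item]) for record in records for item in record}
    frequent: Dict[Itemset, float] = {}
    k = 1
    while level:
        counts = {c: sum(1 for r in records if c <= r) for c in level}
        kept = {c: cnt / n for c, cnt in counts.items() if cnt / n >= min_supp}
        frequent.update(kept)
        k += 1
        # Join step: merge frequent (k-1)-itemsets into k-item candidates.
        candidates = {a | b for a in kept for b in kept if len(a | b) == k}
        # Prune step: every (k-1)-subset of a candidate must be frequent.
        level = {c for c in candidates
                 if all(frozenset(s) in kept for s in combinations(c, k - 1))}
    return frequent


def constrained_rules(records: List[Itemset], min_supp: float,
                      min_conf: float, allowed: Set[str]) -> List[Rule]:
    """Keep rules whose consequent uses only features named in `allowed`."""
    frequent = frequent_itemsets(records, min_supp)
    rules: List[Rule] = []
    for itemset, support in frequent.items():
        for size in range(1, len(itemset)):
            for consequent in map(frozenset, combinations(itemset, size)):
                if any(i.split("=", 1)[0] not in allowed for i in consequent):
                    continue  # consequent constraint
                antecedent = itemset - consequent
                confidence = support / frequent[antecedent]
                if confidence >= min_conf:
                    rules.append((antecedent, consequent, confidence))
    return rules


# Hypothetical records for illustration.
records = [
    frozenset({"activity=high", "genre=romance", "emotion=positive"}),
    frozenset({"activity=high", "genre=romance", "emotion=positive"}),
    frozenset({"activity=low", "genre=romance", "emotion=negative"}),
    frozenset({"activity=high", "genre=action", "emotion=positive"}),
]
for rule in constrained_rules(records, 0.5, 0.8, {"emotion"}):
    print(rule)
```

Because the constraint is applied when rules are generated from frequent itemsets, the frequent itemsets themselves are unchanged; only the number of reported rules shrinks, which is the effect described above.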

Experimental results

This section presents experimental results following application of the improved Apriori algorithm to features extracted for content mining. The cloud infrastructure employed is presented in "Cloud infrastructure", and the experimental setup and data sets are presented in "Experimental setup". The performance of the proposed framework is then evaluated in "Performance analysis".

Cloud infrastructure

A virtual cluster is a simple but fast environment in which to build the Hadoop framework. In the cloud infrastructure we implemented, a Dell server with Intel Xeon E5-2630 1.8 GHz cores and 32 GB of memory is employed. A virtual cluster consisting of four nodes is then deployed. Each node is allocated two virtual CPUs and 4 GB of memory. One node is set as the master machine for Hadoop, while the remainder are used as slave nodes. Hadoop version 2.5 is installed on the platform. The global controller for the data crawler is deployed on the same master machine as Hadoop, while its workers are distributed to the slave nodes. The Hive [36] tool is also installed on the platform to pre-process raw data samples.

Experimental setup

Table 4 shows the summary statistics for the data harvested from Douban for 114 Korean films up to April 2015. During this period, a total of 714,946 comments were collected from 228,806 distinct users. Each film received, on average, around 6271 comments. In addition, of the 714,946 comments, 54,939 were made without allocating a rating. These statistics resulted in an average film rating of 3.7 (on a scale of 1–5) for all films.

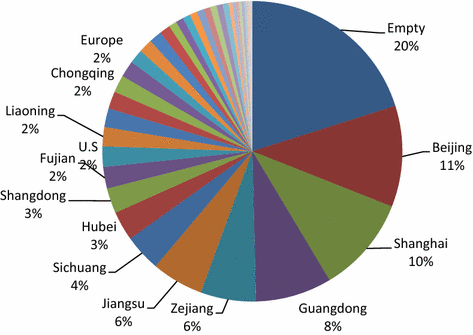

Figure 4 illustrates the geographic distribution of users who commented on the 114 Korean films in the study. Nearly 80 % of users provided location data, which for Chinese nationals is identified at the provincial level, including the municipalities of Beijing, Tianjin, Shanghai, and Chongqing (treated as provinces), while overseas users are labelled by their country of origin. Unsurprisingly, most Chinese users were located in developed cities and provinces such as Beijing, Shanghai, Guangdong, Zhejiang, and Jiangsu, while users from the U.S. formed the largest single overseas group. Generally speaking, in these centres the user experience is enhanced by greater access to faster Internet speeds and a sophisticated public infrastructure for film exhibition. The data therefore show significant participation by a greater number of users from these areas.

Geographic distribution of users commenting on the 114 Korean films in the study

Performance analysis

This section deals mainly with the effect of the minimum support threshold (\(\mu\)) on the performance of the improved Apriori algorithm. A smaller value of \(\mu\) tends to generate more rules than a larger value. However, a vast number of rules is difficult to understand and process, not to mention the computational costs involved. Our aim was therefore to test the robustness of the proposed algorithm (in particular the execution time and number of rules generated) under various parameter settings. To this end, the impact of the minimum support threshold was examined using different values of \(\mu\), i.e., \(\mu\) was set at 10, 20, 30, 40, and 50 %, respectively, while the minimum confidence c was set at 50 %. In other words, all rules satisfying both \(\mu\) and c were selected.

Furthermore, in the proposed rule-mining algorithm, all features associated with films, reviews, and users are included in the antecedent set, while film and review features are selected for the consequent feature set, with a view to understanding what factors might affect user comments or preferences. The proposed rule-mining algorithm is then applied to the full data set, which contains 660,007 comments (comments without ratings are removed). For each comment, 13 features are extracted, as listed in Table 3. Therefore, a full (660,007 \(\times\) 13) sample matrix is formed to represent the full data set. We also benchmarked the proposed Apriori algorithm against other algorithms for mining association rules, including traditional Apriori [46], Eclat [47], MapReduce-based Apriori (MRA) [48], and Spark-based Apriori (SBA) [49], whose implementations are summarized at [50]. Both traditional Apriori and Eclat are single-machine algorithms that use the generate-and-test mining strategy. On the other hand, MRA and SBA use state-of-the-art cloud computing technologies. In particular, SBA is a cutting-edge algorithm based on Spark [51], in which data can be processed and cached in machine memory.

Table 5 compares the five mining algorithms in terms of the number of rules extracted. As observed, more rules are generated as the minimum support threshold decreases. For instance, the proposed Apriori algorithm produced 581 and 12,466 rules for \(\mu =\) 50 % and 10 %, respectively. Meanwhile, the proposed Apriori algorithm generated the fewest rules in all five cases. That is because we only allowed certain features to form the consequent set, thereby eliminating unnecessary rules for easier interpretation. On the other hand, both the traditional Apriori and Eclat algorithms failed to generate association rules for any case of \(\mu \le\) 40 %. That is, with a sample matrix of dimension (660,007 \(\times\) 13) for the given problem, the amount of data exceeds the processing capacity of the traditional Apriori and Eclat techniques on a single machine, owing to the significant computational cost and extensive memory requirements.

In addition, Table 6 compares the execution times of the different algorithms. The reported execution time reflects the entire mining process, including data loading, rule mining, and the generation of output reports. Unsurprisingly, the traditional Apriori and Eclat algorithms required much more time than the cloud computing-based mining algorithms when presented with a huge data set. When \(\mu =\) 50 %, for instance, the computational time for traditional Apriori and the proposed algorithm was approximately 5 days and 85.2 s, respectively. The much longer processing time recorded for traditional Apriori is related to the procedures for generating frequent itemsets. Again, both the traditional Apriori and Eclat approaches fail to solve the problem for \(\mu \le\) 40 %. In contrast, all parallel mining algorithms perform stably in terms of execution time, finding all association rules within approximately 100 s. Furthermore, the proposed algorithm performs better than MRA, taking less computational time, which indicates its flexibility and suitability for the Big Data mining investigated herein. The average execution time of the proposed algorithm (84.02 s) is slightly longer than that of the SBA algorithm (82.84 s). One reason for this is that the SBA approach processes the data within machine memory, while the proposed algorithm applies MapReduce on disk. Implementing the proposed algorithm using Spark should improve its performance, and we leave this aspect of our work for future research.

Discussion

For the generated association rules, we are more interested in rules with high lift (as defined in Eq. (12)), a measurement which reflects the interest value of rules [42]. Herein we summarize some rules with high lift in Table 7. The minimum support threshold \(\mu\) and confidence c were set at 30 and 50 %, respectively.

A few observations can be made. First, in terms of film features, there is a notable absence of significant rules that relate to a film's rating, popularity, or to a specific director or actor. This may be because the specific cohort under investigation at this time pays less attention to a commercial film's production context (for reasons that could be explored in a further study). Second, however, film genre appears to be a significant feature (see Rule 1). The romance–drama genre had the widest appeal among this particular film community. This mirrors the fact that currently the largest percentage of films made in China are romance dramas.

These two observations have significant implications for the future of China's feature film industry given that, since China joined the WTO in 2001, cooperation between the Chinese and Korean film industries has drawn them and their fans closer together. This relationship has blossomed via a handful of policy-driven co-produced romance dramas. It has also resulted in a much larger number of informal collaborations, including other genre films made by Chinese companies using Korean visual effects firms, foreign cast and crews, and shooting scenes on location in one or both countries. As a result of these "willing collaborations", Korean films now loom large among Douban users. In short, Douban users disseminate their opinions on a wide range of these international collaborative films and make recommendations to their followers and friends, thus potentially influencing film culture on a wide scale.

Third and finally, in terms of user profiles, we discovered that there is an absence of appreciable and significant rules relating to "location" and "leadership" (see Rules 2 and 3). Medium-term users (i.e., those whose membership duration is medium) are more interested in the genre and therefore respond with positive comments. A user's established preference for Korean films (i.e., activity is high) also leads to positive comments on new Korean films, although this group generally cares less about a film's director and more about its cast and story.

With these three observations in mind, the framework for our study could potentially be extended not only to Big Data processing of text-based film-related content generated by a variety of international users commenting on other countries' films across different social network systems, but also to cover the reams of data generated by next-generation media sources. For example, in the not-too-distant future, additional sources for user/customer sentiment analysis are likely to include real-time interactions experienced via new Hybrid Broadcast Broadband TV (HbbTV) platforms, which will effectively remove the spotlight from online text-based social networking sites such as Douban. The ability to directly engage interactive feedback from audiences via multiple consoles, smart TVs, and smart devices, for example, will provide a just-in-time capacity to both content producers and the traditional distribution channels that are currently the subject of social media sentiment analysis. Having said this, we trust that the preliminary methods and tools explored in our present study will underpin some of the new research initiatives on the horizon. The ability to process the types of structured and unstructured data at volume and scale derived from Douban and other SNS platforms is presently giving birth to a new field, whatever its future might be. Harvested at scale across multiple languages, platforms, and geographies, eWOM is already being aggregated and utilized by Western media pioneers like Netflix and HBO in their content-generation and media strategies. Giant Chinese online and mobile video services such as Sohu, Youku, Sina Video, Tencent Video, and LeTV, where Big Data harvesting and analysis is prevalent, are surely heading in a similar direction. Perhaps some of the exploratory lessons offered here will provide them with additional food for thought.

Conclusion

In this paper, we propose a framework for complex Big Data processing that cannot be achieved on a single machine using average hardware and software. Three modules are introduced that are capable of crawling raw online records, generating key features to represent original samples in useful ways, and then running an association rule-mining algorithm on the cloud for further content mining.

The proposed framework is implemented using the cutting-edge Hadoop platform, which is used as the fundamental tool for storing and processing harvested data sets. Thirteen high-level features are generated from three categories (film details, reviews, and user profiles) using aggregation functions, and the data is further quantified using the describable set. More importantly, an improved parallel Apriori algorithm is proposed to find significant correlations among these thirteen key features, with a view to expanding the analytical methods to a larger data set, that is, all film (or other popular culture) comments on Douban and/or other future-generation OSNs.

In the wake of this preliminary and somewhat novel study, the proposed framework offers a flexible capability and efficient applicability for the processing of large amounts of social media data that in turn can be fed back to producers and distributors of both commercial and user-generated digital media content.

Research on mining user-generated content, however, is still in its infancy, and therefore progress in this exciting arena must continue. The research presented in this paper has only investigated a small area using big data techniques. There are many possibilities for future research directions and improvements, including the implementation of content mining using other rule mining algorithms (such as the Frequent Pattern Growth (FP-Growth) strategy), as well as using the Spark or Apache Tez platforms to achieve better performance. One of our very next tasks is to investigate users' network structure to identify leadership links and trends among Douban's expansive user and follower networks.

References

-

Mayer-SchÖnberger V, Cukier Grand. Large data: a revolution that will transform how nosotros live, work and recollect. New York: Houghton Mifflin Harcourt Publishing Visitor; 2013.

-

Koh NS, Hu Northward, Clemons EK. Do online reviews reverberate a product's true perceived quality? an investigation of online movie reviews across cultures. Electron Commer Res Appl 2010; nine(5):374–85 (Special Section on Strategy, Economics and Electronic Commerce).

-

Singh VK, Piryani R, Uddin A, Waila P. Sentiment analysis of movie reviews: A new feature-based heuristic for aspect-level sentiment classification. In: International Multi-Conference on Automation, Computing, Communication, Control and Compressed Sensing (iMac4s), 2013; p. 712–seven.

-

Yao R, Chen J. Predicting film sales revenue using online reviews. In: IEEE International Conference on Granular Computing (GrC), 2013; p. 396–401.

-

Chang A, Liao J-F, Chang P-C, Teng C-H, Chen One thousand-H. Application of artificial immune systems combines collaborative filtering in picture recommendation system. In: International Briefing on Reckoner Supported Cooperative Work in Design (CSCWD); 2014. p. 277–82.

-

Samad A, Basari H, Burairah H, Ananta GP, Junta Z. Stance mining of motion picture review using hybrid method of support vector machine and particle swarm optimization. Procedia Eng. 2013;53:453–62.

-

Yu Ten, Liu Y, Huang Ten, An A. Mining online reviews for predicting sales performance: a case study in the movie domain. IEEE Trans Knowl Data Eng. 2012;24(4):720–34.

-

Amolochitis E, Christou It, Tan Z-H. Implementing a commercial-force parallel hybrid movie recommendation engine. IEEE Intell Syst. 2014;29(ii):92–6.

-

Zang W, Zhang P, Zhou C, Guo L. Comparative study between incremental and ensemble learning on data streams: case report. J Big Data. 2014;one:1–5.

-

Liu 10, Wang Ten, Matwin S, Nathalie J. Meta-mapreduce for scalable data mining. J Big Data. 2015;ii(1):xiv.

-

Liu C-L, Hsaio Westward-H, Lee C-H, Lu G-C, Jou E. Movie rating and review summarization in mobile environment. IEEE Trans Syst Homo Cybern Part C Appl Rev. 2012;42(3):397–407.

-

Wong FMF, Sen S, Chiang M. Why watching movie tweets won't tell the whole story? In: Proceedings of the 2012 ACM Workshop on Workshop on Online Social Networks, New York, NY, U.s.; 2012. p. 61–half dozen.

-

Singh VK, Piryani R, Uddin A, Waila P. Sentiment analysis of movie reviews and weblog posts. In: IEEE 3rd International Advance Computing Briefing (IACC); 2013. p. 893–98.

-

Hodeghatta UR. Sentiment analysis of hollywood movies on twitter. In: IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (ASONAM); 2013. p. 1401–four.

-

Mouthami Thousand, Devi KN, Bhaskaran VM. Sentiment analysis and nomenclature based on textual reviews. In: International Conference on Information Communication and Embedded Systems (ICICES); 2013. p. 271–6.

-

Gupta North, Abhinav KR. Fuzzy sentiment assay on microblogs for motion-picture show acquirement prediction. In: International Conference on Emerging Trends in Advice, Command, Signal Processing Calculating Applications (C2SPCA); 2013. p. i–4.

-

Yeap JAL, Ignatius J, Ramayah T. Determining consumers' most preferred ewom platform for movie reviews: a fuzzy analytic hierarchy procedure approach. Comput Homo Behav. 2014;31:250–8.

-

Rui H, Liu Y, Whinston A. Whose and what chatter matters? the issue of tweets on movie sales. Decision Support Syst. 2013;55(iv):863–lxx.

-

Delen D, Sharda R. Predicting the financial success of hollywood movies using an data fusion arroyo. Indus Eng J. 2010;21(ane):thirty–7.

-

Ghiassi Chiliad, Lio D, Moon B. Pre-production forecasting of movie revenues with a dynamic artificial neural network. Expert Syst Appl. 2015;42(6):3176–93.

-

Barrio JB, Rubio XA. Geolocated picture show recommendations based on expert collaborative filtering. In: Proceedings of the 4th ACM Briefing on Recommender Systems, New York, NY, USA; 2010. p. 347–8.

-

Singh V, Mukherjee Chiliad, Mehta Grand. Combining collaborative filtering and sentiment classification for improved picture recommendations. In: Multi-disciplinary Trends in Bogus Intelligence. Lecture Notes in Informatics, vol. 7080; 2011. p. 38–50.

-

Kawase R, Nunes BP, Siehndel P. Content-based picture show recommendation within learning contexts. In: International Briefing on Advanced Learning Technologies (ICALT); 2013. p. 171–3.

-

Nessel J, Cimpa B. The movieoracle-content based picture recommendations. In: International Briefing on Web Intelligence and Intelligent Agent Technology (WI-IAT), vol. 3; 2011. p. 361–64.

-

Dumitras A, Haskell BG. Content-based moving-picture show coding—an overview. In: IEEE Workshop on Multimedia Signal Processing; 2002. p. 89–92.

-

Wang Z, Yu 10, Feng N, Wang Z. An improved collaborative movie recommendation system using computational intelligence. J Visual Lang Comput. 2014;25(6):667–75.

-

Li Q, Kim BM. Clustering approach for hybrid recommender system. In: Proceedings of IEEE/WIC International Briefing on Web Intelligence; 2003. p. 33–8.

-

Trattner C, Kappe F. Social stream marketing on facebook: a instance written report. Int J Soc Humanist Comput. 2013;2(i):86–103.

-

Jansen BJ, Zhang M, Sobel K, Chowdury A. Twitter power: tweets as electronic word of oral fissure. J Am Soc Inf Sci Technol. 2009;threescore(11):2169–88.

-

Harri AL, Rea A. Web 2.0 and virtual world technologies. J IS Educ. 2009;twenty(2):137–44.

-

Eisenfeld B, Fluss D. Contact centres in the web two.0 world. CRM Magazine. 2009;13(2):48–nine.

-

Cheng JW, Mitomoa H, Otsukab T, Jeonc Due south. The furnishings of ict and mass media in post-disaster recovery—a two model case study of the great east japan convulsion. Telecommun Policy. 2013;39(six):515–32.

-

Yecies B. Inroads for cultural traffic: breeding korea'due south cinematiger. In: Black D, Epstein S, Tokita A, editors. Complicated currents: media product, the Korean wave, and soft power in East Asia. Melbourne: Monash University EPress; 2010.

-

Nagwani NK. Summarizing large text collection using topic modeling and clustering based on mapreduce framework. J Large Data. 2015;2(1):6.

-

https://www.kobis.or.kr/kobis/business/principal/principal.exercise.

-

https://hive.apache.org/.

-

http://www.ltp-deject.com/intro/en/.

-

Yecies B, Yang J, Berryman M, Soh K. Marketing bait (2015) Using smart data to identify eastward-guanxi among china'south 'internet aborigines'. In: Film Marketing into the twenty-first century. British Film Found.

-

Yecies B, Yang J, Berryman M, Soh K. Korean female writer–directors and smart analysis of their reception on china's social media scene. In: Women Screenwriters: An International Guide. Palgrave Macmillan (forthcoming)

-

Yuan C, Zhuang Y, Li H. Semantic based chinese sentence sentiment assay. Int Conf Fuzzy Syst Knowl Discov (FSKD). 2011;4:2099–103.

-

Zhang C, Zhang S. Association rule mining: models and algorithms. In: Lecture Notes in Information science; 2307. Lecture Notes in Artificial Intelligence, New York: Springer, C2002. 2002.

-

Brin South, Motwani R, Jeffrey D. Dynamic itemset counting and implication rules for marketplace basket data. In: Proceedings of the ACM SIGMOD International Briefing on Management of Information; 1997. p. 265–76.

-

Lin K-Y, Lee P-Y, Hsueh S-C. Apriori-based frequent itemset mining algorithms on mapreduce. In: Proceedings of the 6th International Conference on Ubiquitous Information Management and Communication. ICUIMC '12; 2012. p. 1–8.

-

Li N, Zeng L, He Q, Shi Z. Parallel implementation of apriori algorithm based on mapreduce. In: 13th ACIS International Conference on Software Engineering, Artificial Intelligence, Networking and Parallel Distributed Computing (SNPD); 2012. p. 236–41.

-

Agrawal R, Imielinski T, Swami A. Database mining: a performance perspective. IEEE Trans Knowl Data Eng. 1993;5(6):914–25.

-

Agrawal R, Srikant R. Fast algorithms for mining association rules. In: Proceedings of the 20th International Conference on Very Large Databases; 1994. p. 487–99.

-

Liu L, Li E, Zhang Y, Tang Z. Optimization of frequent itemset mining on multiple-core processor. In: Proceedings of the 33rd International Conference on Very Large Databases; 2007. p. 1275–85.

-

Othman Y, Osman H, Ehab E. An efficient implementation of apriori algorithm based on Hadoop–Mapreduce model. Int J Rev Comput. 2012;12:59–67.

-

Qiu H, Gu R, Yuan C, Huang Y. Yafim: a parallel frequent itemset mining algorithm with spark. In: Proceedings of the 2014 IEEE International Parallel & Distributed Processing Symposium Workshops; 2014. p. 1664–71.

-

https://jackyanguow.wordpress.com/2015/11/04/source-codes-for-parallel-association-rule-mining/.

-

https://spark.apache.org/.

Authors' contributions

JY and BY are the main researchers for the work proposed in this paper. JY's contributions include collecting data, initial drafting of the article, and coding implementation. BY plays an important role in providing the background investigation and editing the article. Both authors read and approved the final manuscript.

Acknowledgements

This article draws on research currently being conducted in association with an Australian Research Council Discovery project, Willing Collaborators: Negotiating Change in East Asian media production DP 140101643.

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Yang, J., Yecies, B. Mining Chinese social media UGC: a big-data framework for analyzing Douban movie reviews. Journal of Big Data 3, 3 (2016). https://doi.org/10.1186/s40537-015-0037-9

DOI: https://doi.org/10.1186/s40537-015-0037-9

Keywords

- Social media

- User-generated content

- Big data analytics

- Content mining

- Parallel association-rule mining

Source: https://journalofbigdata.springeropen.com/articles/10.1186/s40537-015-0037-9
